Thoughts on WWDC 2023

Focusing on design, subtle things, and the implications of what was announced

You can follow my WWDC 2023 thread, which covers everything that was launched, here:

https://twitter.com/jose_goncalves_/status/1665765307632287744?s=20

In the thread are the facts (mostly).

In this post are the opinions.

I don’t judge whether things are good or bad.

I only comment on what I feel like commenting.

I let my imagination and designer brain fly freely about what was shown.

Let’s cut to the interesting little details in both hardware and software.

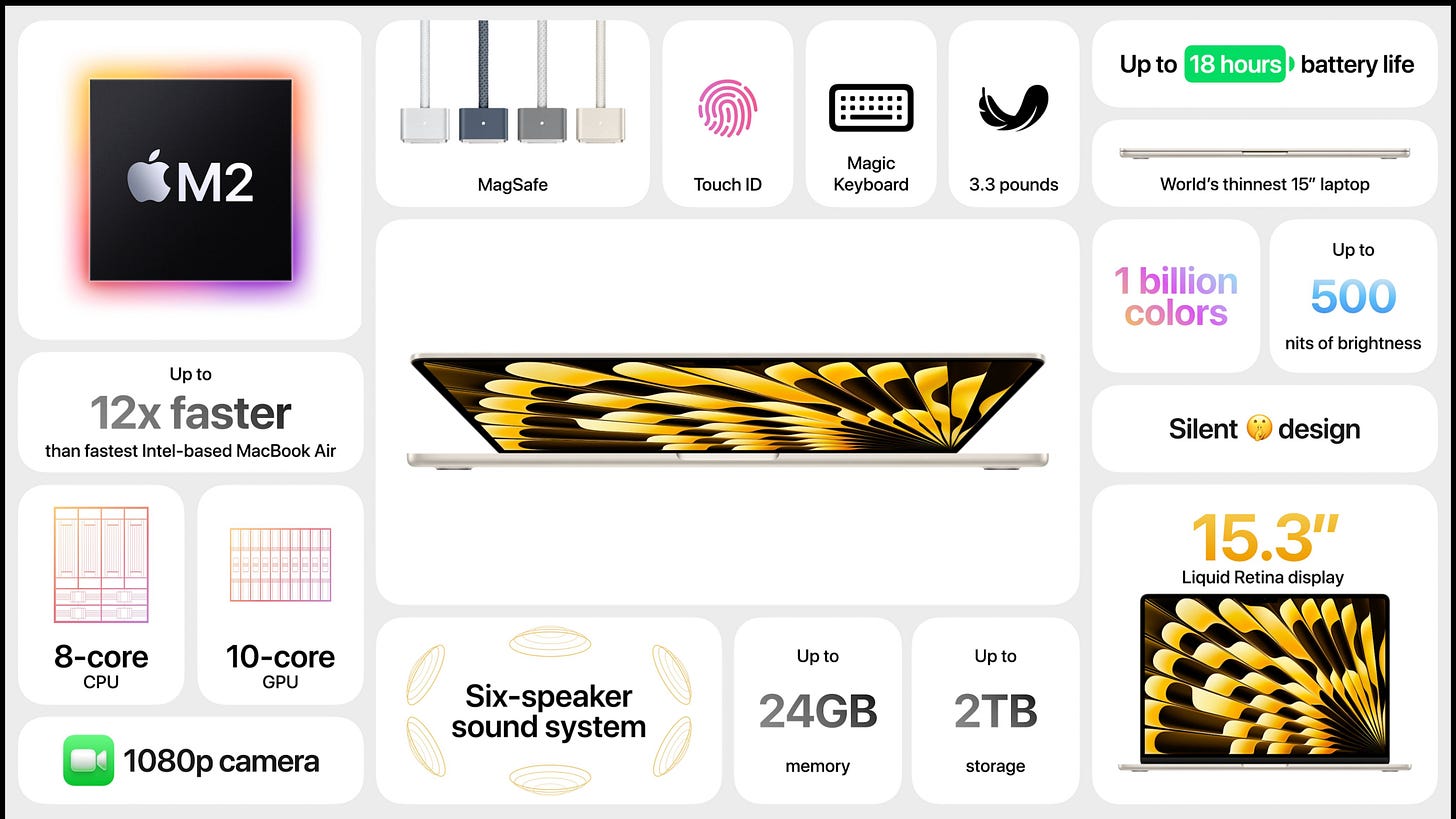

1. MacBook Air M2

From a market standpoint, the MacBook Air is now in what I call the “DJI problem”, meaning it only competes with itself. At this point, unless you really hate Tim’s guts - or have some niche use case - there isn’t a rational reason to get an entry-level laptop other than the MacBook Air. The question is which MacBook Air.

Now that it comes in 15 inches with an M2, it feels to me like this laptop also eats into the MacBook Pro’s territory. Let’s admit it: most people who own Apple Silicon MacBook Pros don’t really need the extra power they provide. They send emails and Slack messages all day.

That is to say: this new laptop is - at least on paper - another exceptional one.

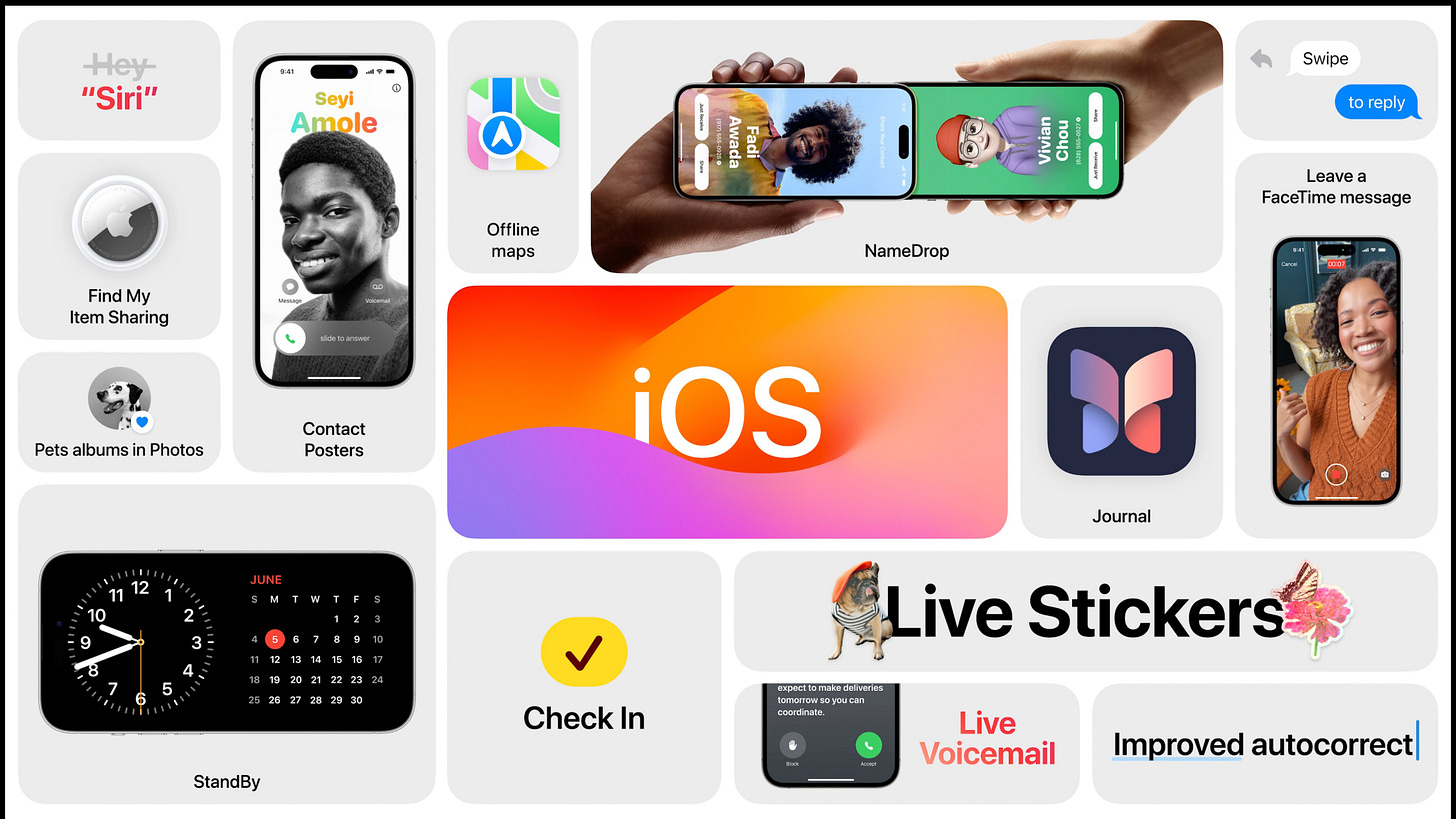

2. iOS 17

No major leaps, but subtle improvements to the parts of our iPhones we use most often.

2.1. Phone App

Personalization

So basically they want to make your iPhone feel like yours, likely to increase your sense of ownership over it. Cool.

Apple has been pushing in this direction for a while now. My gut isn’t sure it’s the right approach. Let me explain.

Look at the home and lock screens of the iPhone 2G through the iPhone 5.

Now look at these custom home and lock screens from iOS 16.

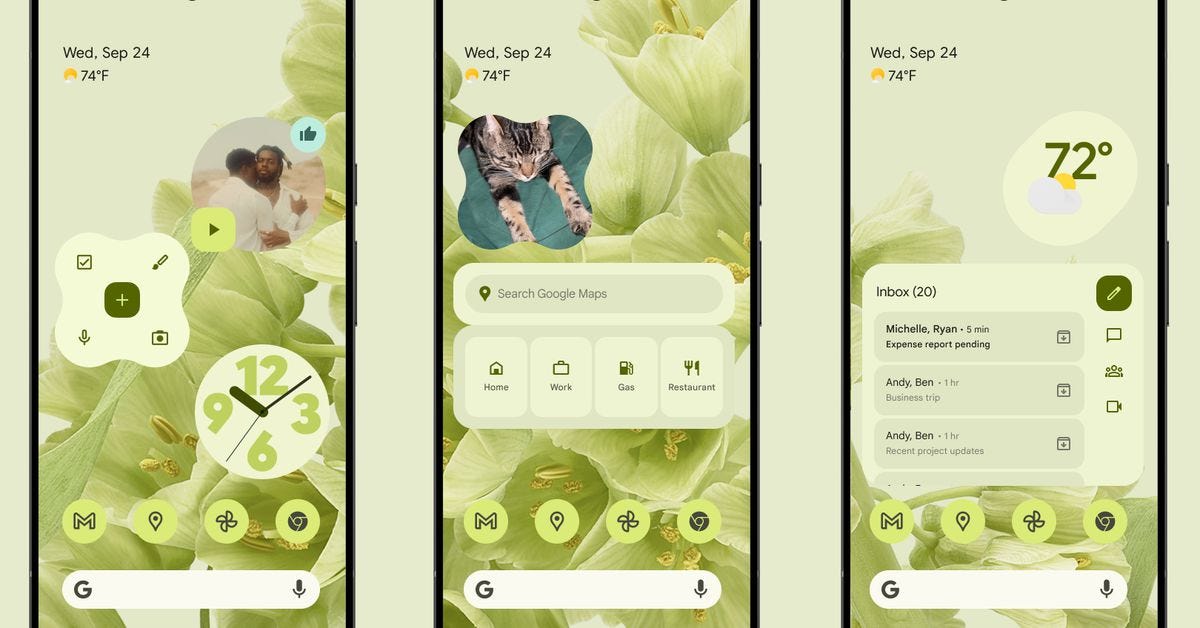

Now look at these Material You home and lock screens.

While personalization might seem great at first sight, it comes at the cost of control. For a company that relies so much on its products being iconic, losing total control over the look of its software isn’t a trivial thing.

Sure, there is still an extremely well-crafted design system that allows you to distinguish an iPhone from an Android, but the line is getting thinner.

If you saw someone on the street from a distance, you probably couldn’t tell a personalized iOS home screen from a Material You one. These things matter.

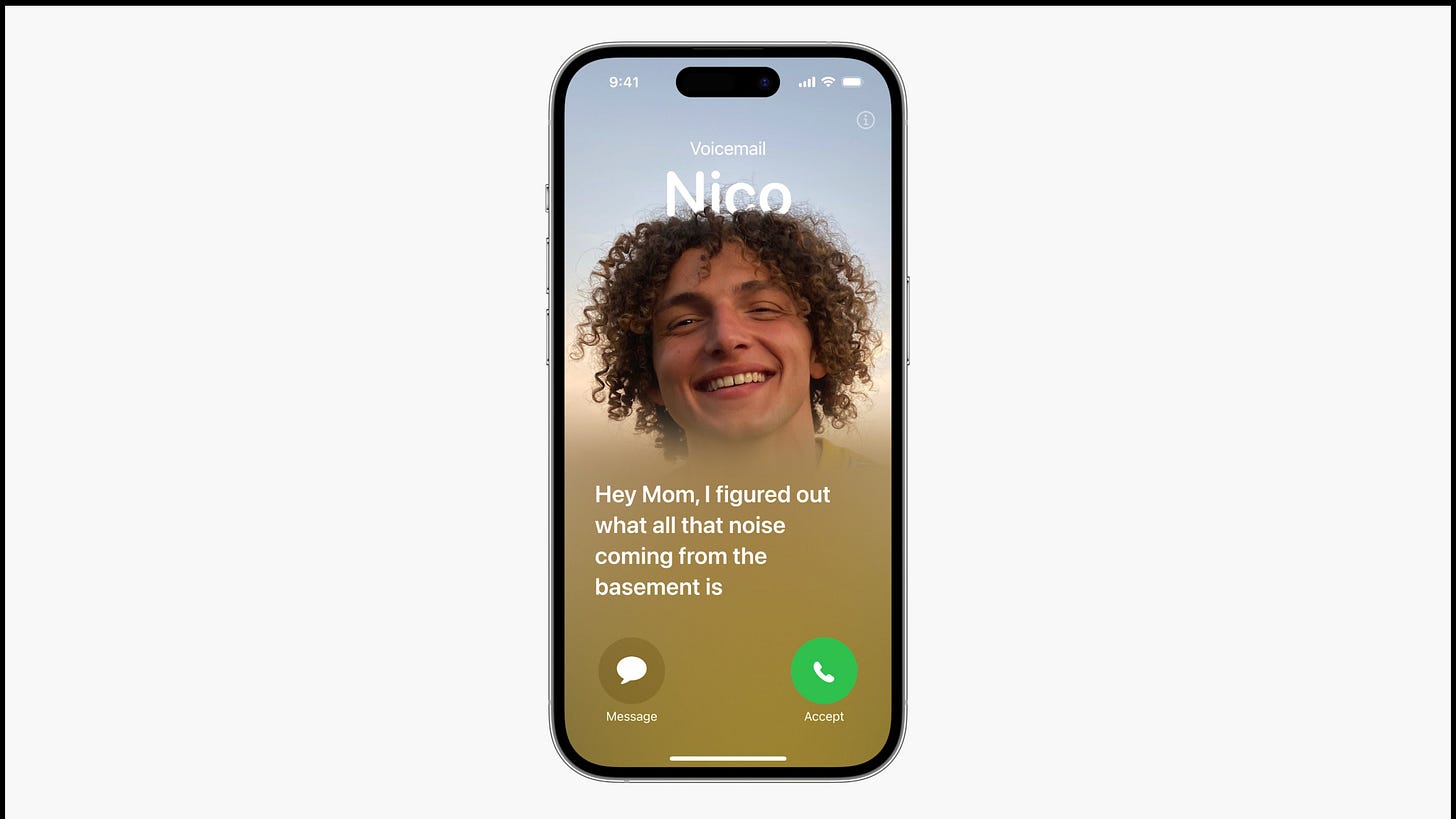

While the new call screen keeps the iconic slider at the bottom, that’s about all that is left. The font and even the position of the letters change between screens, the contrast levels are all over the place, and these are the best-case scenarios. Never underestimate how ugly a user can make things look.

I am not advocating for Apple to have total control over the designs, but if they keep following this trend - custom widgets, icons, lock screen, phone book - then where will the trend stop? What will be iconic about it?

Status signaling

Personalized contacts will become a new massive status signal. Like emojis on names but on steroids. Nobody will go through the effort of customizing the contact of that cousin with whom they talk once a year, but they sure as hell will customize their boyfriend’s.

This status signal is especially impactful because it is visible to others.

Your friend left his phone on the table. Someone is calling him. Is the contact personalized? Is it a cute photo? Is it a Memoji? What expression is the Memoji making? 😎🔥❤️🤡⭐️👯‍♀️

There is no way this goes unnoticed among the younger crowd.

Walled garden

This is exactly the kind of thing that keeps iOS users from ever leaving “Apple’s walled garden”. People won’t go through the effort of personalizing their whole contact list with photos only to drop it all for an Android.

Photo labeling

Going a level deeper: might this be a clever push by Apple to get people to label the people in their photos? The Photos app already runs facial recognition on everyone but doesn’t know who is who until you tell it. This might be a neat incentive for people to start labeling the faces in their Photos app. Once you tell your iPhone which face is Clara’s, it can find Clara in all your photos and cross-analyze how often she shows up in your photos, messages, and calls to give you better automatically generated memories, for example. (There are certainly dozens of better use cases for this data. This was just the first one that came to mind.)

Call screening

“Live Voicemail” seems to be an incredibly clever way to do call screening without relying on faulty AIs. It eliminates the habit of texting “urgent” before calling.

Maybe it will also create a whole new medium of communication (?)

Imagine you want to send a message to someone and be sure they get it right now but not necessarily do anything about it. This could be it. Higher certainty of reception than a text message and less intrusive than a call they have to pick up.

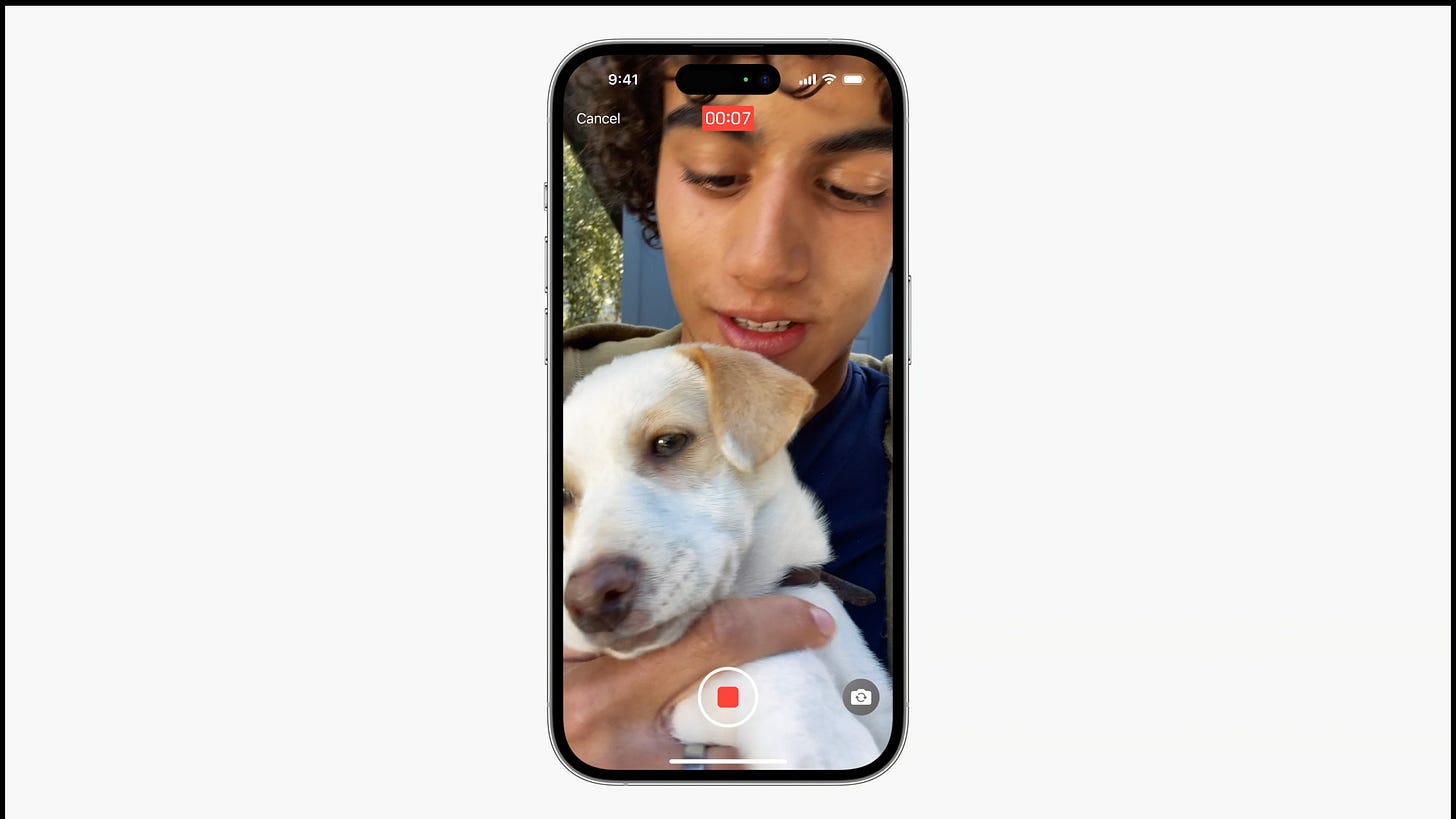

Video messages

It’s weird that this one didn’t exist in the first place: you can now leave a video message when someone doesn’t pick up your video call. No-brainer. Yet somehow it wasn’t the default up until now.

I predict this will increase engagement in iMessage - assuming the video message is sent there - because it adds context to missed calls. The conversation will go from “I couldn’t pick up, what did you want to show?” straight to talking about the content of a video message. Similar to how Instagram Stories are top of the funnel for DMs, missed video calls with attached video messages can now be a much stronger conversation starter. By default.

The moment the other person doesn’t pick up is also the perfect moment to ask a user to send a video message. They are already being filmed. They already know they look good. They already know what they were gonna say. There is very little friction left.

2.2. iMessage

Little things that improve the experience

Semantic search of texts - pretty cool and probably a step closer to being able to semantically search anything you have ever said;

Audio message transcription - amazing because some people love audio messages while others hate them. These people can now happily talk to each other;

Check-in - not a big deal for now, but I can easily see it evolving into something more social. Sharing battery info, location, and status based on location is something people want, as Zenly proved, but right now Apple is only using it for safety;

Better auto-correct and Dictation - a sprinkle of safe AI here and there. I like that they didn’t try anything weird and stuck to “making this the best platform to type on”.

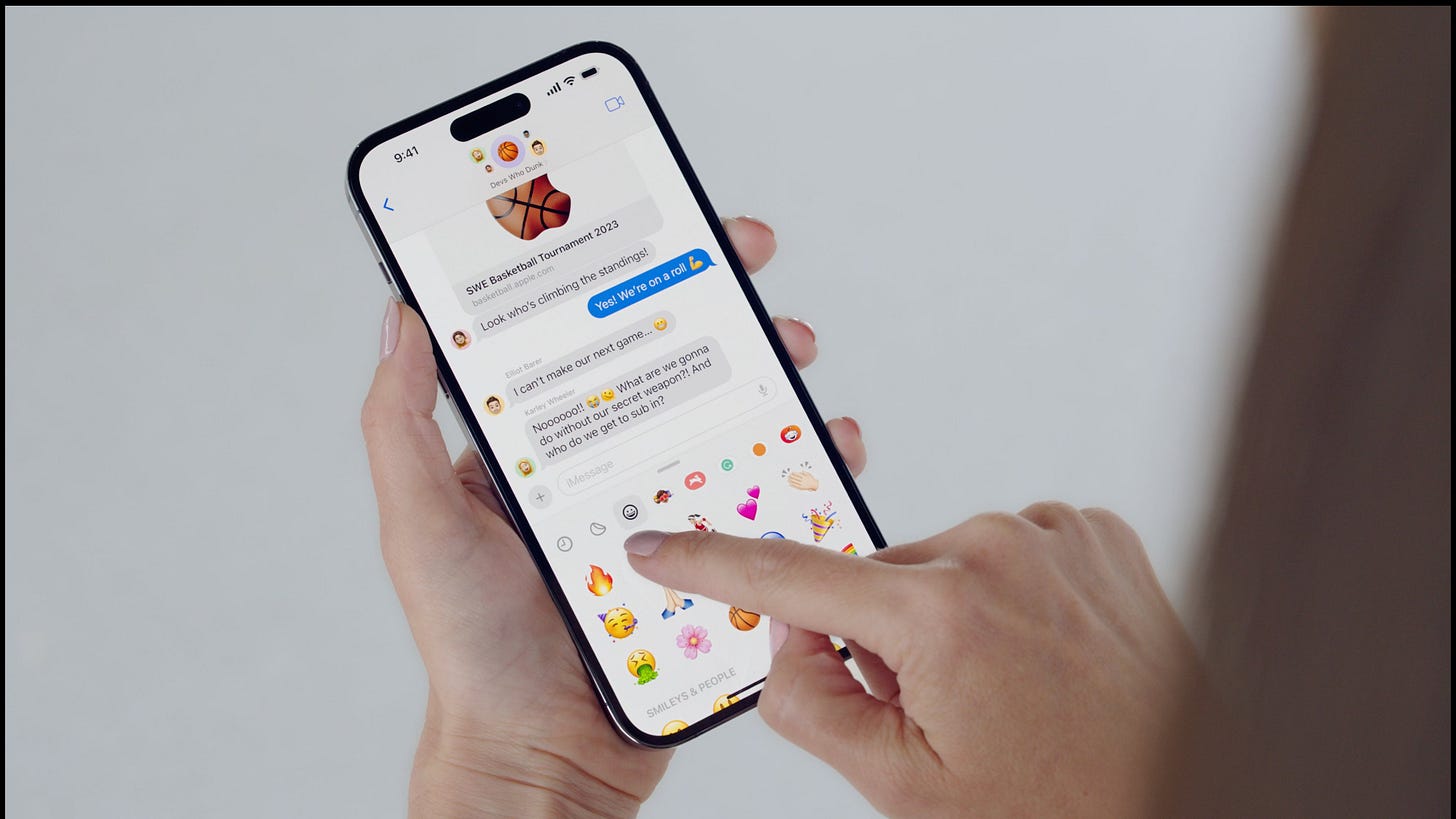

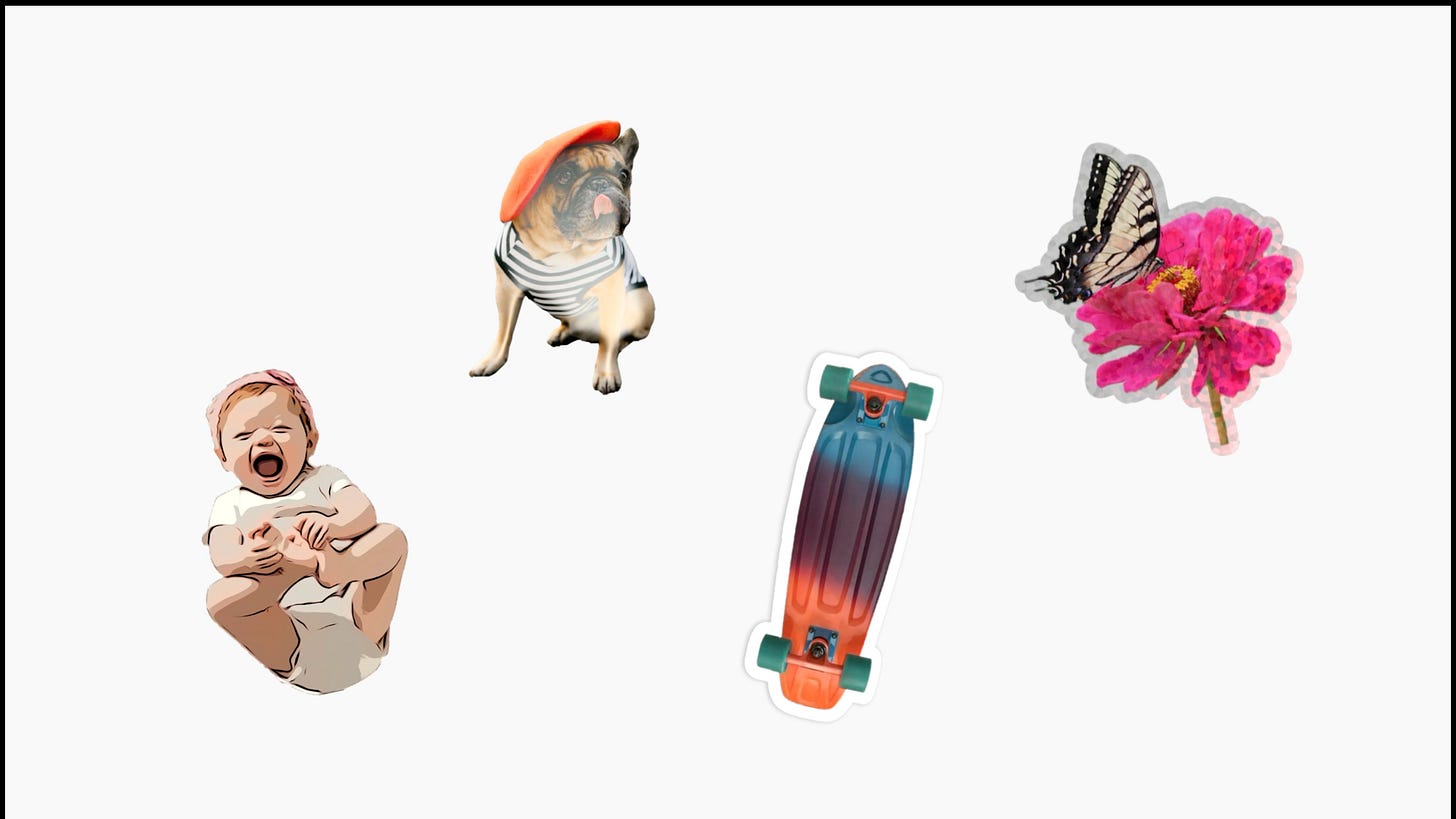

Stickers

Another example of “we do it late af, but we do it properly” from Apple.

Who needs a new UI to place stickers when you can drag them and stick them anywhere?

Who needs an app to make stickers when you can hold on any photo and make them that way?

Oh, did we mention that they are holographic? And video? And work everywhere?

I obviously haven’t tested it yet, but this seems like a masterful play from Apple in an area of major importance: self-expression. Going back to my earlier rant about customization and being iconic, this has the potential to be an incredibly customizable tool while also being iconic. The holographic effect makes iPhone stickers stand out from all others.

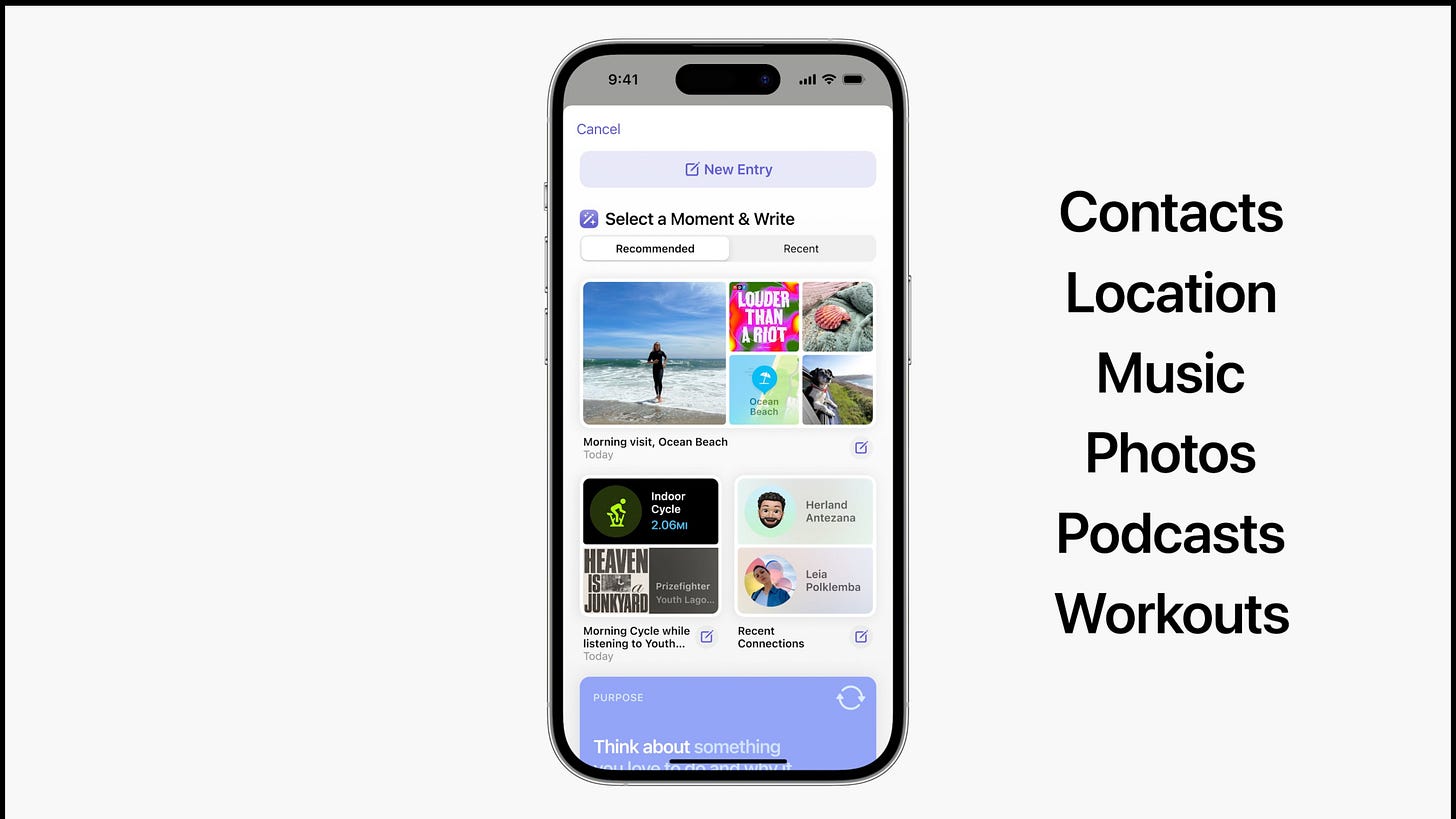

2.3. Journal - Apple’s own journaling app

Mixed feelings about the approach.

Very few people actually journal.

What I think will happen here is that Apple will essentially journal automatically for people, whether they want it or not.

If they have all this info on the user on a daily basis, what is there left to do? Press “accept”?

There must be a bigger play here. If there wasn’t, I don’t think Apple would go through the hassle of making a standalone journaling app that - if not pre-filled by default - very few people will actually use.

Maybe it’s just another brick in the garden’s wall? Who will want to leave after having their whole life documented there?

My 2 takeaways are:

This will be the best journaling app out there due to the data it has access to;

It must be part of a bigger future play.

2.4. NameDrop - Sharing info by touching devices

This is big. It is a new physical gesture. A new ritual. A new communication channel.

You can now play catch with iPhones and register who you have already caught.

You can now share Pokémon, trading cards, or anything else in digital games by actually being present and doing something physical.

You can now exchange contacts with someone without a single word.

You can now send malware on the metro during rush hour by being jammed against people.

You can now prove you know someone in real life.

You can now ask for locally shared digital autographs.

These are just silly ideas that came to mind, but they show how random things can get when you open a new communication channel.

3. iPadOS 17

Fine improvements that compound. Nothing major, but a few things to highlight.

3.1. PDFs

We developed self-driving taxis before we created a universal way to interact with PDFs. This honestly blows my mind.

Anyway, the live collaboration, auto-fill, and integration with Notes are pretty cool.

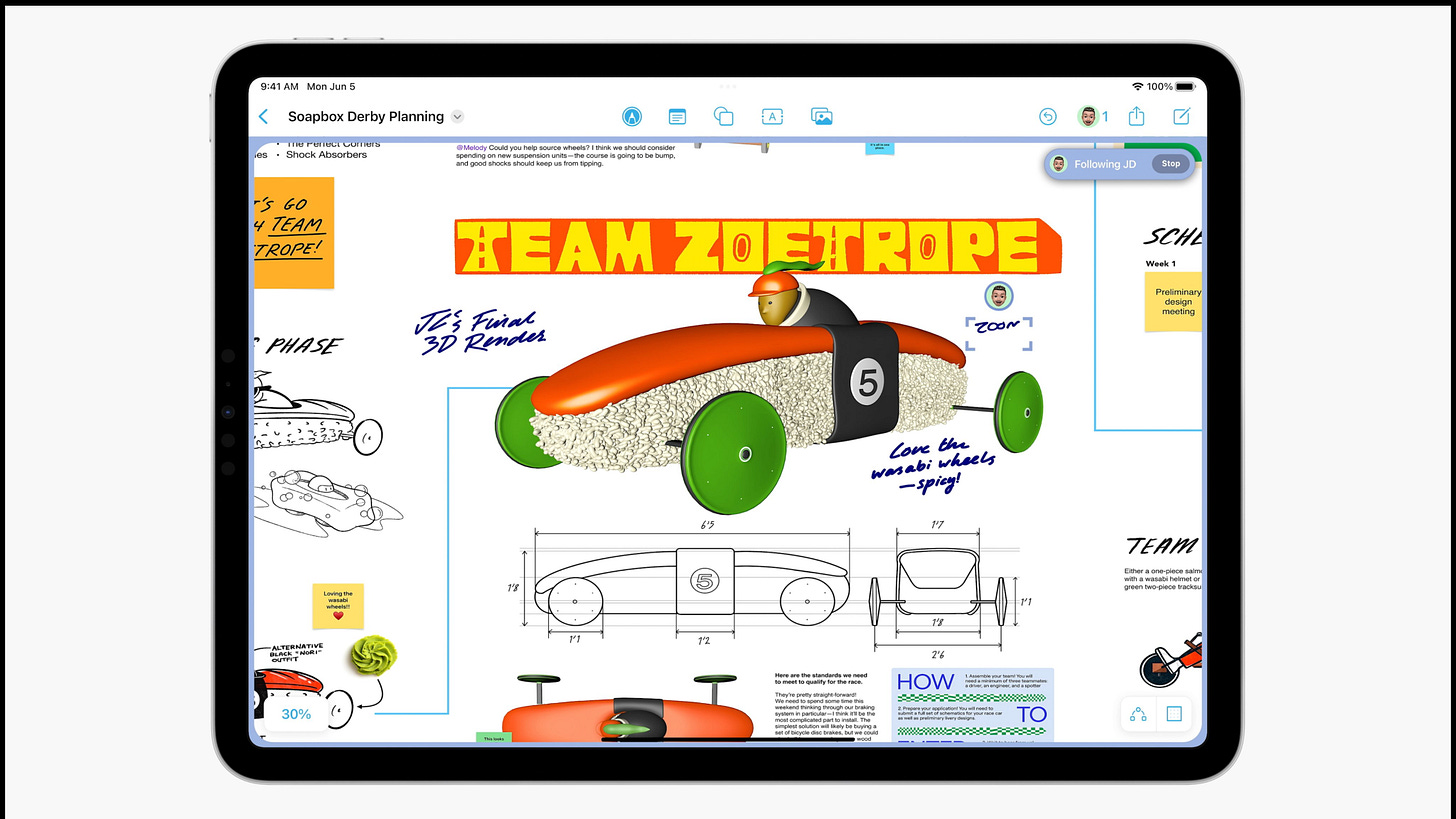

3.2. Freeform

Freeform got some new features that make it more collaborative. I still don’t know who this product is for or what its end goal is.

Do they want to replace Figma, FigJam, Keynote, the Notes app, or the paper notebook? This might very well just be me being uninformed, but right now I don’t understand where the app stands, and these new features don’t make it any clearer.

If you know, reach out.

4. macOS Sonoma

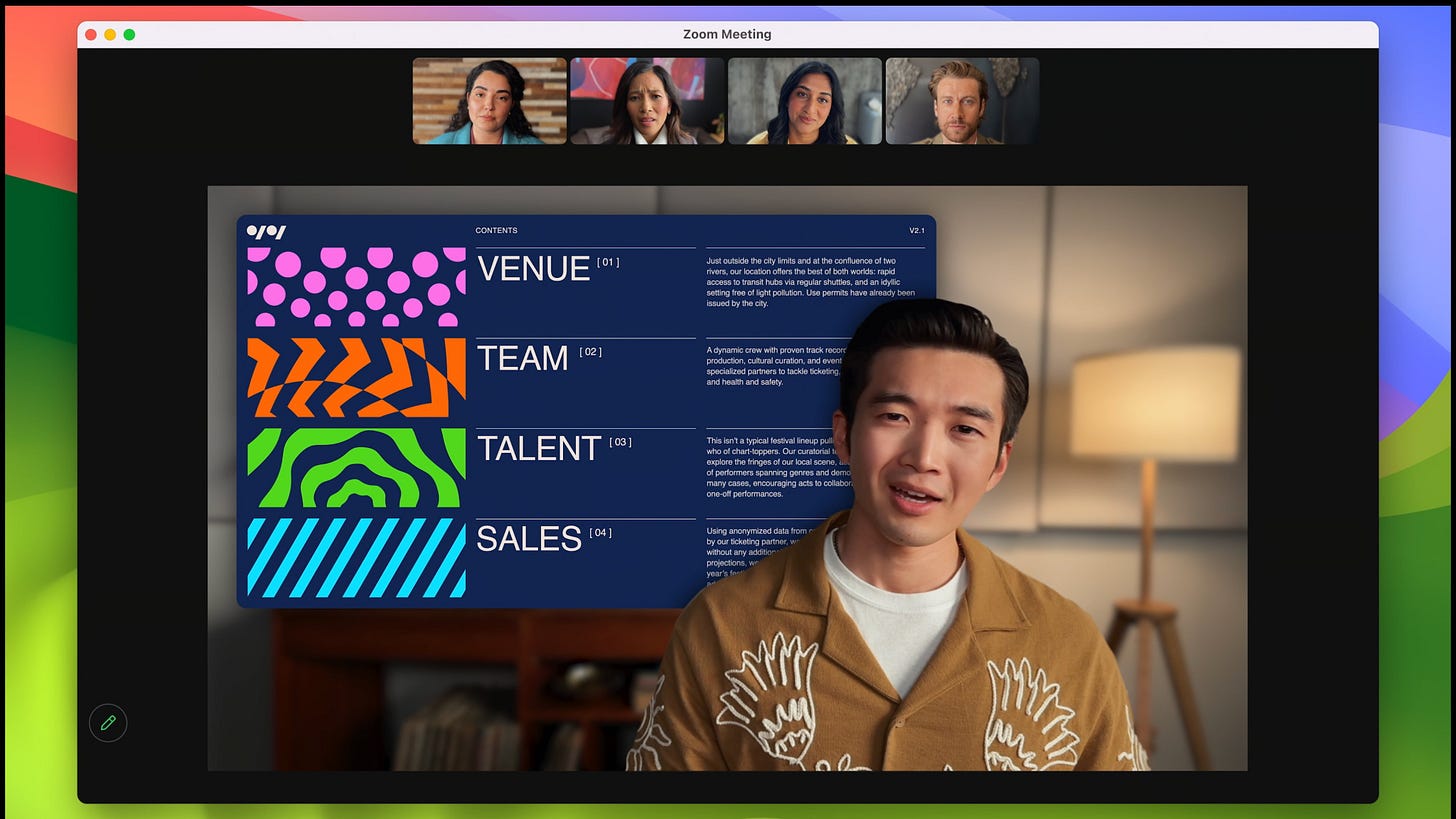

4.1. Video conferencing tools

These look amazing (!) and integrate seamlessly with existing tools with no extra hassle, which is even more amazing!

Can you imagine how bad the C-levels with Lenovo ThinkPads will feel when their new intern with a MacBook Air runs laps around them in remote presentation quality?

Tools like “face over video” or “reactions” are particularly exciting to me because they make communicating so much easier. Gone are the days of piping an OBS feed into Zoom just to get a PiP effect. Gone are the days of making 3 clicks to react to something on Zoom. Also, these new “default options” look so much better!

4.2. Passkey Sharing

This might just be a better way to manage group passwords, but it might also evolve into a seamless transition to socially verifiable wallets.

This is a stretch, I know, but imagine if the solution to the crypto problem of lost seed phrases were to have family members confirm that the wallet (or any other account) is indeed yours.

This is the flow I’m picturing:

You create an account on X . com and give Mom and Dad “verification access” to it. This means that they don’t know the password but can confirm that the account belongs to you. Years later, you forget the password. You can regain access by asking Mom and Dad to issue a new one and send it to you.

(my thinking is still very loose on this one. bla bla bla socially identifiable accounts bla bla bla)
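To make that flow a bit more concrete, here is a minimal Python sketch of guardian-based recovery. Everything in it is hypothetical: the `Account` class, the approval threshold, and the choice to hand the fresh password back to the owner (instead of to Mom and Dad, so they still never learn it) are my assumptions, not how Apple’s Passkey sharing or any real wallet works.

```python
import hashlib
import secrets
from dataclasses import dataclass, field


def hash_password(password: str, salt: bytes) -> bytes:
    # Only a salted hash is stored; the plain password is never kept.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


@dataclass
class Account:
    # Hypothetical account whose guardians (e.g. Mom and Dad) can vouch for the owner.
    owner: str
    guardians: set[str]
    threshold: int  # guardian approvals needed before a reset (assumption: k-of-n)
    salt: bytes = field(default_factory=lambda: secrets.token_bytes(16))
    password_hash: bytes = b""
    approvals: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        if not 1 <= self.threshold <= len(self.guardians):
            raise ValueError("threshold must be between 1 and the number of guardians")

    def set_password(self, password: str) -> None:
        self.password_hash = hash_password(password, self.salt)

    def check_password(self, password: str) -> bool:
        # Constant-time comparison of the stored hash and the candidate's hash.
        return secrets.compare_digest(self.password_hash, hash_password(password, self.salt))

    def approve_recovery(self, guardian: str) -> None:
        # A guardian only confirms the request comes from the owner; they never see a password.
        if guardian not in self.guardians:
            raise PermissionError(f"{guardian} is not a guardian of this account")
        self.approvals.add(guardian)

    def recover(self) -> str:
        # Issue a fresh password only once enough guardians have vouched for the owner.
        if len(self.approvals) < self.threshold:
            raise PermissionError("not enough guardian approvals")
        new_password = secrets.token_urlsafe(16)
        self.set_password(new_password)
        self.approvals.clear()  # each recovery needs a fresh round of approvals
        return new_password


if __name__ == "__main__":
    account = Account(owner="you", guardians={"Mom", "Dad"}, threshold=2)
    account.set_password("my old password")
    # Years later the password is forgotten; both parents vouch for you.
    account.approve_recovery("Mom")
    account.approve_recovery("Dad")
    new_password = account.recover()
    print(account.check_password(new_password))  # True
```

The point of this shape is that guardians can only add an approval: they never hold or set the password, which matches the “they don’t know the password, but can confirm that the account belongs to you” part of the flow. A real system would also have to authenticate the guardians themselves, which this sketch skips entirely.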

5. AirPods

Usability, usability, usability.

Adaptive audio - if working as advertised - is basically magic. You hear the things you are supposed to hear, and you don’t hear the rest. That’s it.

It is one of those things that has clearly been years in the making and was being slowly tested through Spatial Audio, noise canceling, transparency, etc.

It is the final boss, the point at which the user has to do nothing and the experience is great.

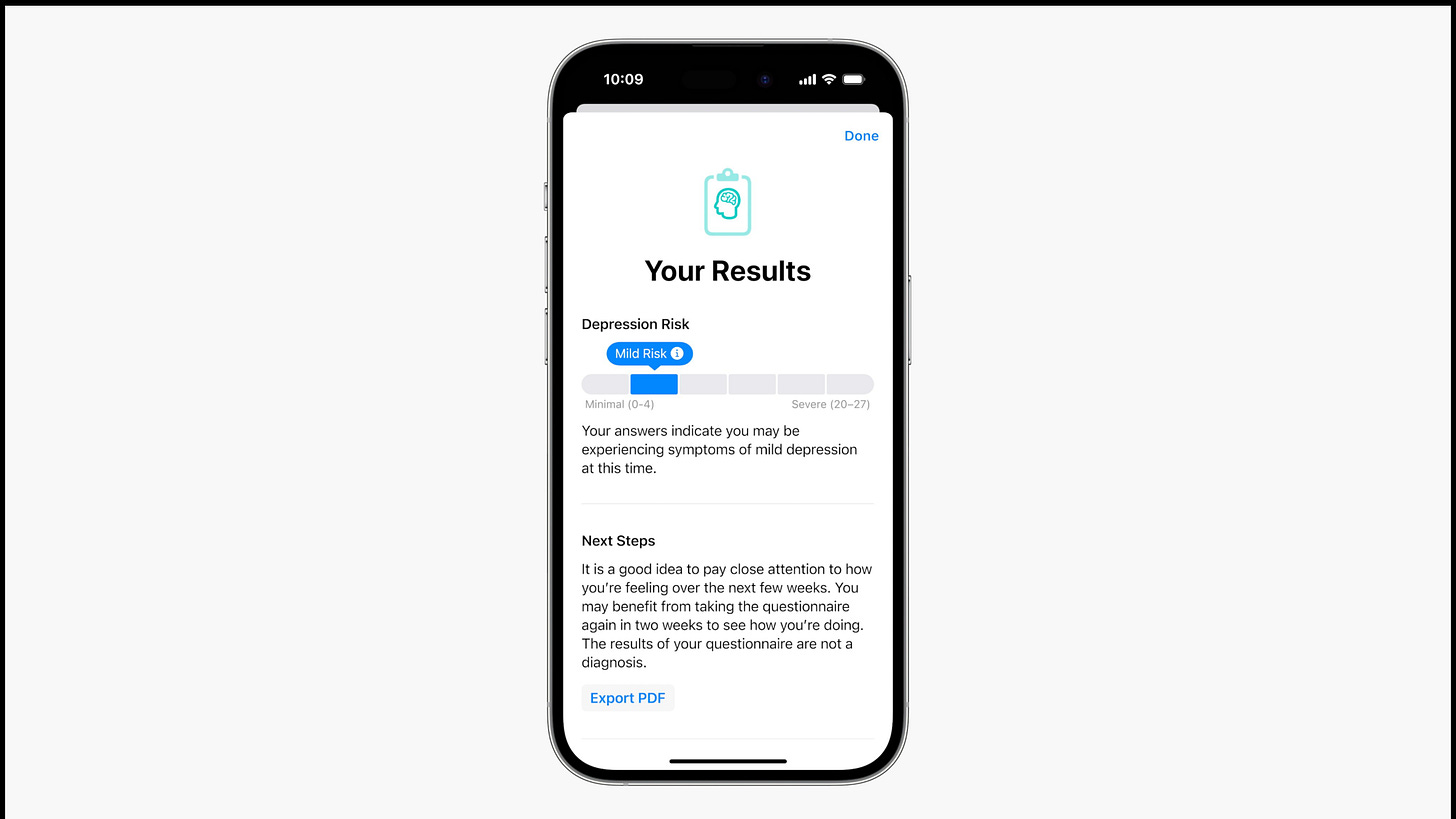

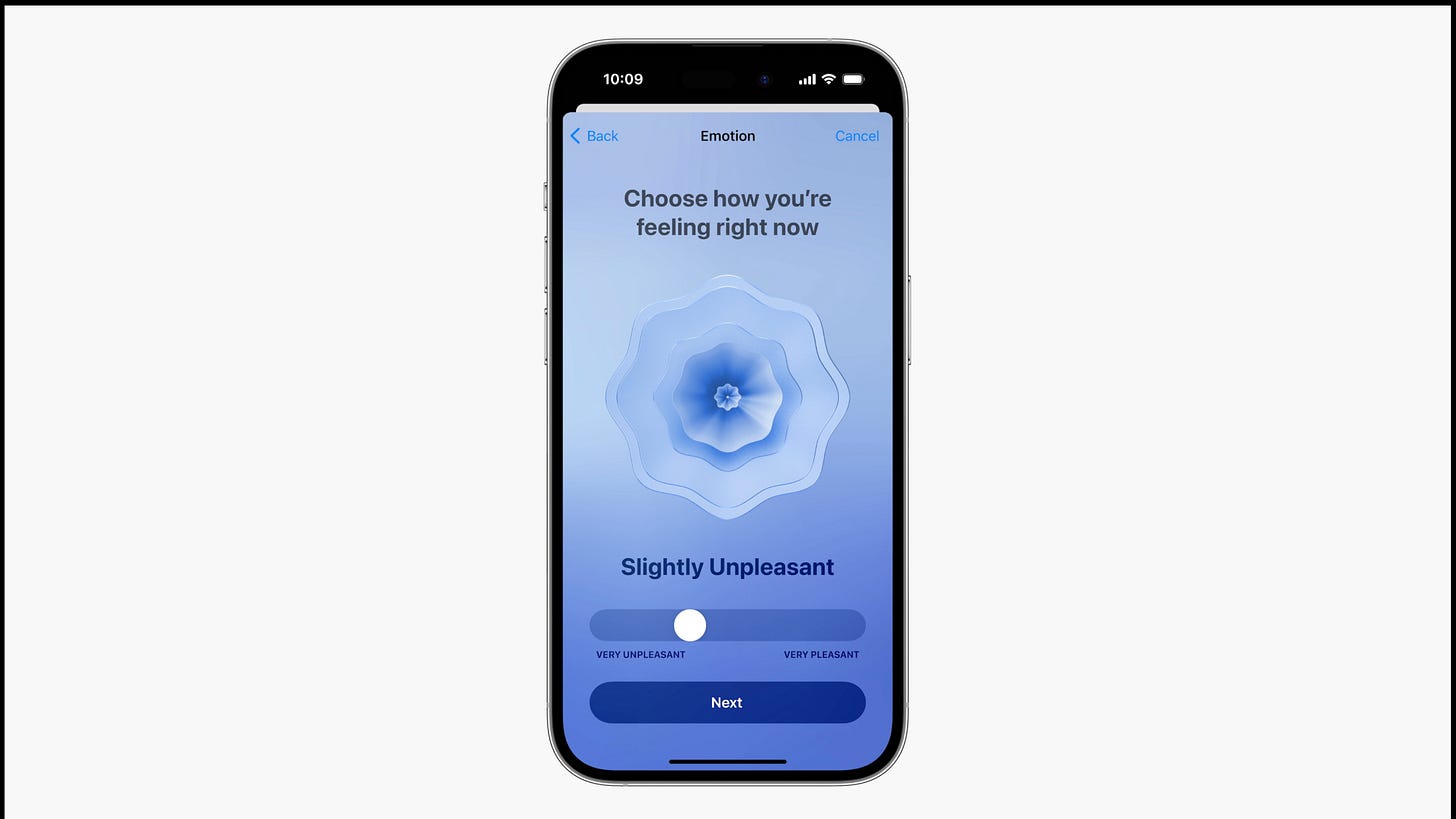

6. Mental Health

If your iPhone knows exactly where you’ve been, who you talked with, how many steps you took, and then you tell it how you feel… what’s left?

This is the final stepping stone toward algorithms that can make you feel a certain way. It won’t be long before we can accurately say “If you want to feel X, just do Y and Z” at scale! Sorry if I sound too much like 1984, but it is a bit scary when you think about it.

Anyway, I am really excited about the emotion visualizations they showed in the demos.

Giving people tools to explore their emotions is extremely impactful and valuable, especially at the scale only Apple can reach. A lot of people go through their lives feeling sad, happy, angry, and nothing else. I believe that teaching people to correctly identify their emotions can lead us to a future with more empathy and less numbness. (The design nerd in me is also very curious to see how they visually portray each emotion.)

7. Apple Vision Pro

The new paradigm.

7.1. Input model

I am in no way qualified to talk about the input model without testing it, but let me ramble about Hands and Voice (and mind?).

The Vision Pro is fully controlled by hands, eyes, and voice. This sounds extremely cool and futuristic, but is it? Let’s explore those options:

Hands

This is how you control your computer and phone. Hands move in 3-dimensional space. The less you need to move them, the faster you can execute commands. On a computer keyboard or phone, the area in which you move your hands is pretty small, resulting in fast command input (input is faster on computers because they are designed to use all fingers, compared to phones, which only use thumbs). Because of this, it is hard for me to imagine a scenario where it is more convenient to move your hands in the air to control a digital interface than to simply type on a keyboard. (This may be a lack of imagination.)

Hands also fatigue easily. Notice how the subjects are often resting their hands on their laps in the demos.

It is impressive that there are no remotes. Apple really trusts its array of cameras and LiDAR to know what your hands are doing. At first, I thought you would need to use an Apple Watch as a remote.

Voice

I don’t believe in voice as a viable control input. Why? Because most of the time there are other people near us. Even if it works magically when we are alone, nobody will get used to an input model that they can only use when they are alone. However, I said the same thing about voice messages and was completely wrong. Voice is natural to use, especially if the device can properly parse it, but I don’t think the content we interact with is made to be used with voice.

Mind

OK, put your conspiracy hats on. This big back side is the perfect place to hide some kind of new electroencephalography cap to read brain activity, right? A man can dream.

7.2. Content

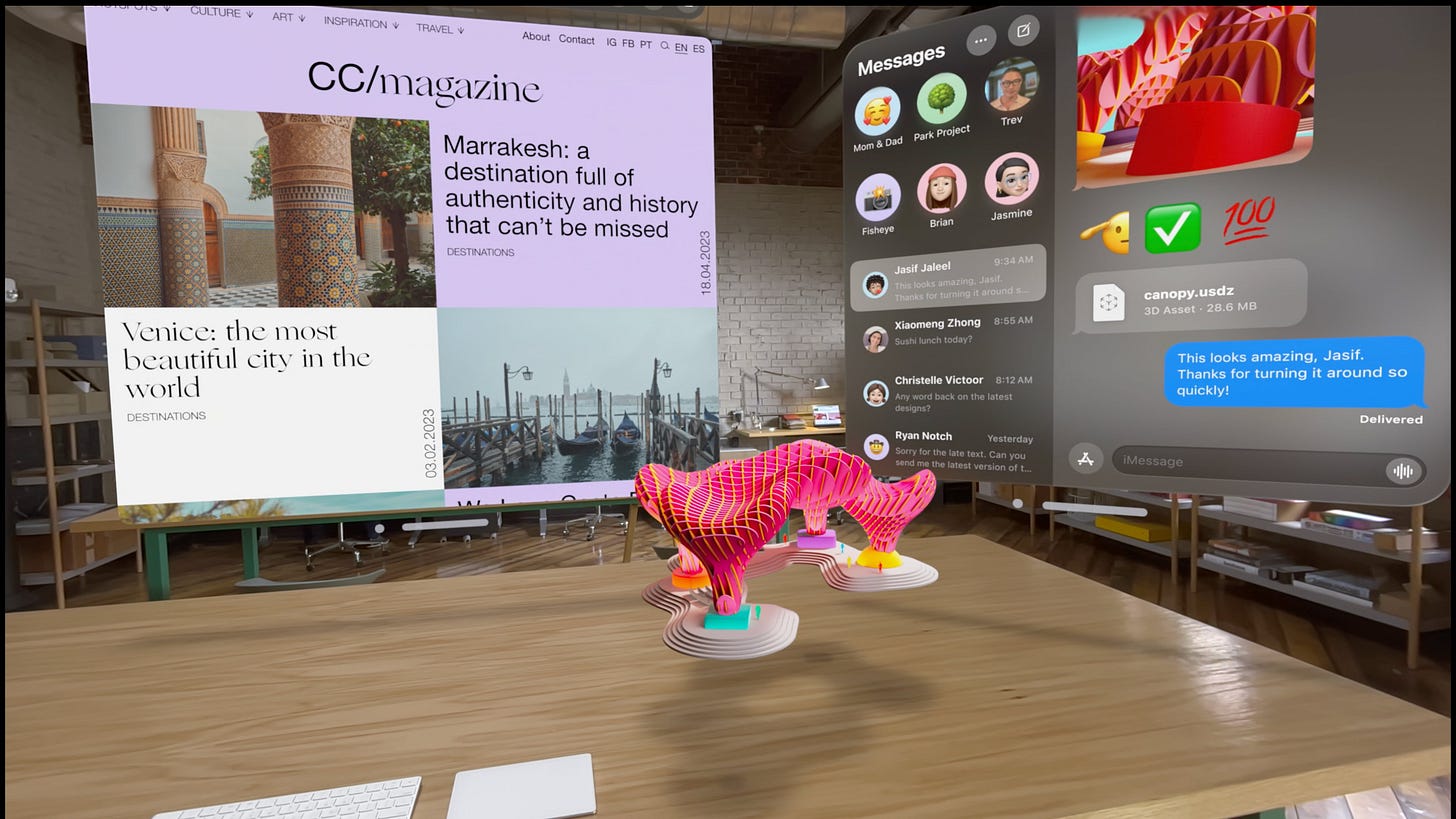

Apple’s sample applications seem… boring?

Don’t get me wrong, I’m sure the experience of watching movies and making FaceTime calls is amazing, but is that really all we ought to be doing on a whole new device?

It feels like nobody has yet fully grasped the possibilities that XR brings to the table. The electric guitar was invented in the 1930s, but nobody really knew what to do with it until Jimi Hendrix showed up.

Most of the real-world application demos could have been taken from VR videos from 2015.

There must be something more exciting to be done with this new platform than looking at Excel sheets and emails in a new way.

Only when we find these applications will we be able to look for the perfect input model.

This isn’t to point the finger at Apple as if they haven’t done the homework, but rather to acknowledge that this is a first-generation product and the exciting content discovery part is still ahead.

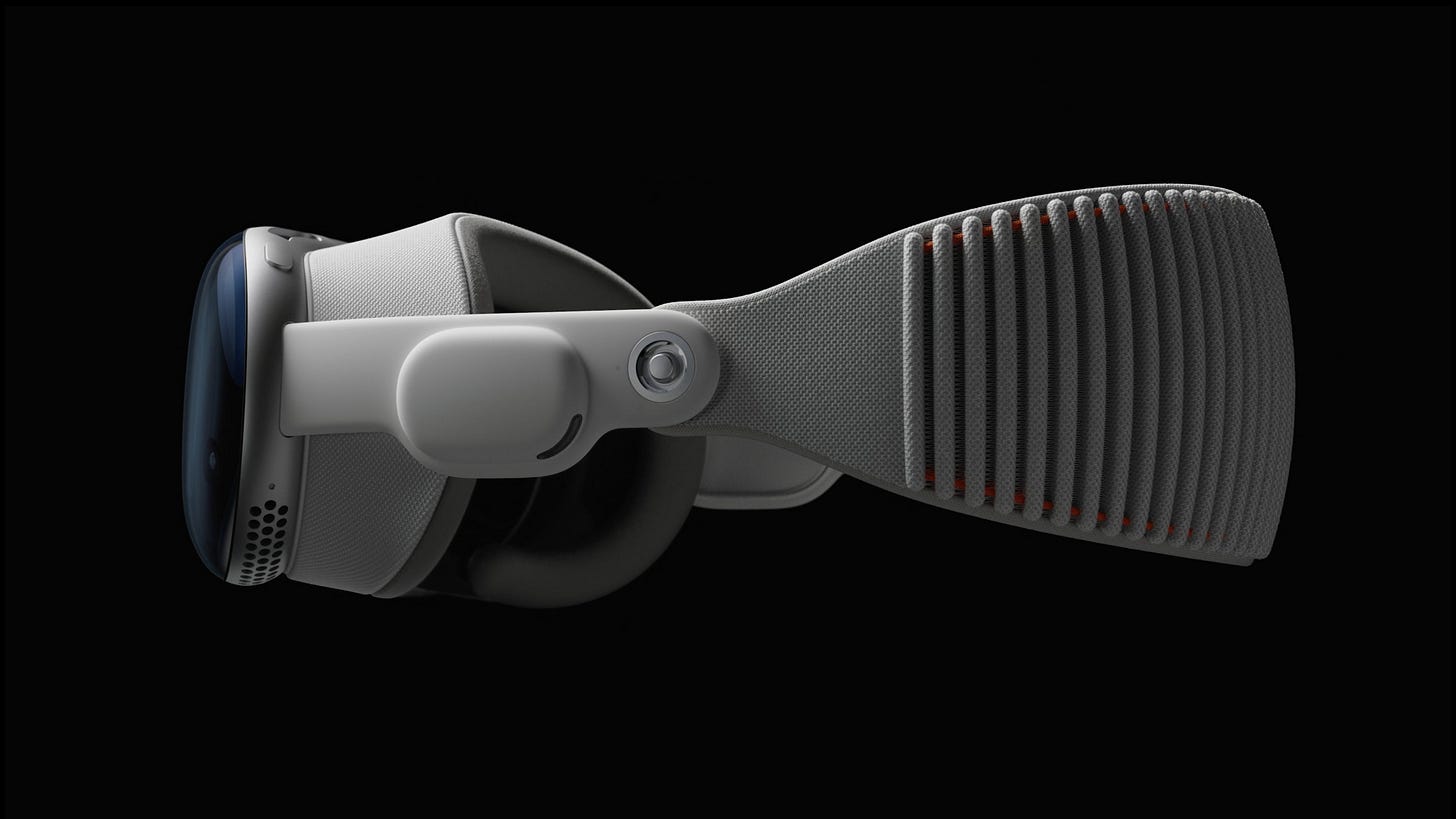

7.3. Coolness / Human Factor

Apple really wanted this headset to feel human, so they put your eyes on a screen. They even made that screen “lenticular” to make sure that people could see perspective changes in your eyes.

Does it look real? Nahhhh. If I’m being honest, the eyes on the screen make it look more dystopian than utopian.

No matter how Apple wants to frame it, these don’t look cool to use and that is a big issue.

The clip where the father looks at his children through the headset while they try to play with him made me particularly sad. It was all so disembodied, so alien, so removed from love.

For as long as the headset is this bulky and tech-looking, I don’t think it will ever be cool to use. And I am a huge nerd. I would already use this thing in a lot of places if I had one.

7.4. Trend line

What I am really excited about in all this is not the headset itself (although it is marvelous) but the trend it accelerates toward XR being a constant presence in our lives.

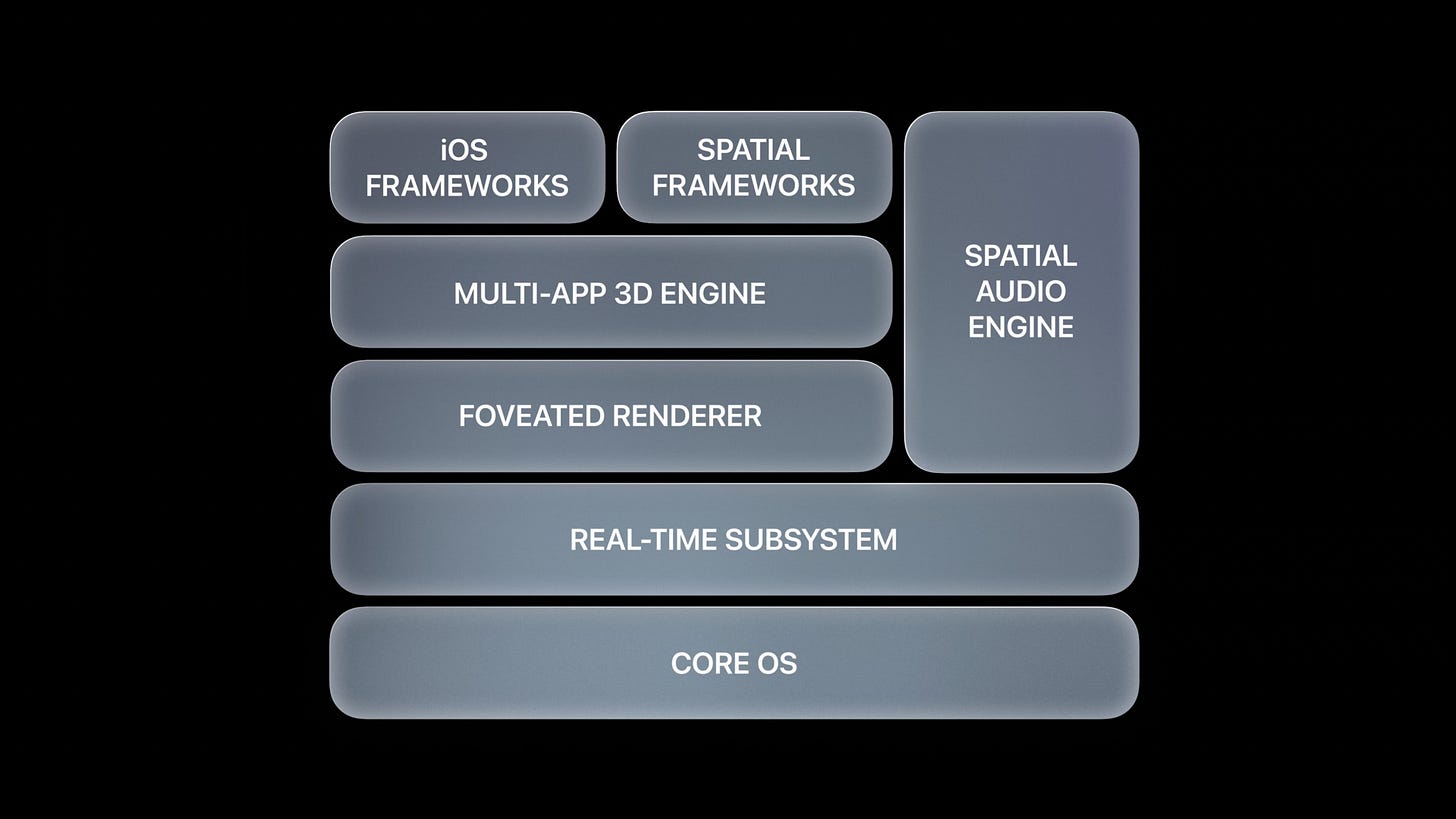

Apple has just laid the foundation for a new OS - visionOS - and now it is up to the creators to explore what is possible within it, just like the iPhone became 100x more interesting with the introduction of the App Store.

This move by the biggest tech company in the world will result in more capital spent on XR, more people working on it, and an overall faster rate of improvement in the industry, which is something I’m all for.

7.5. Others

I could go on for hours about how magical all of this is.

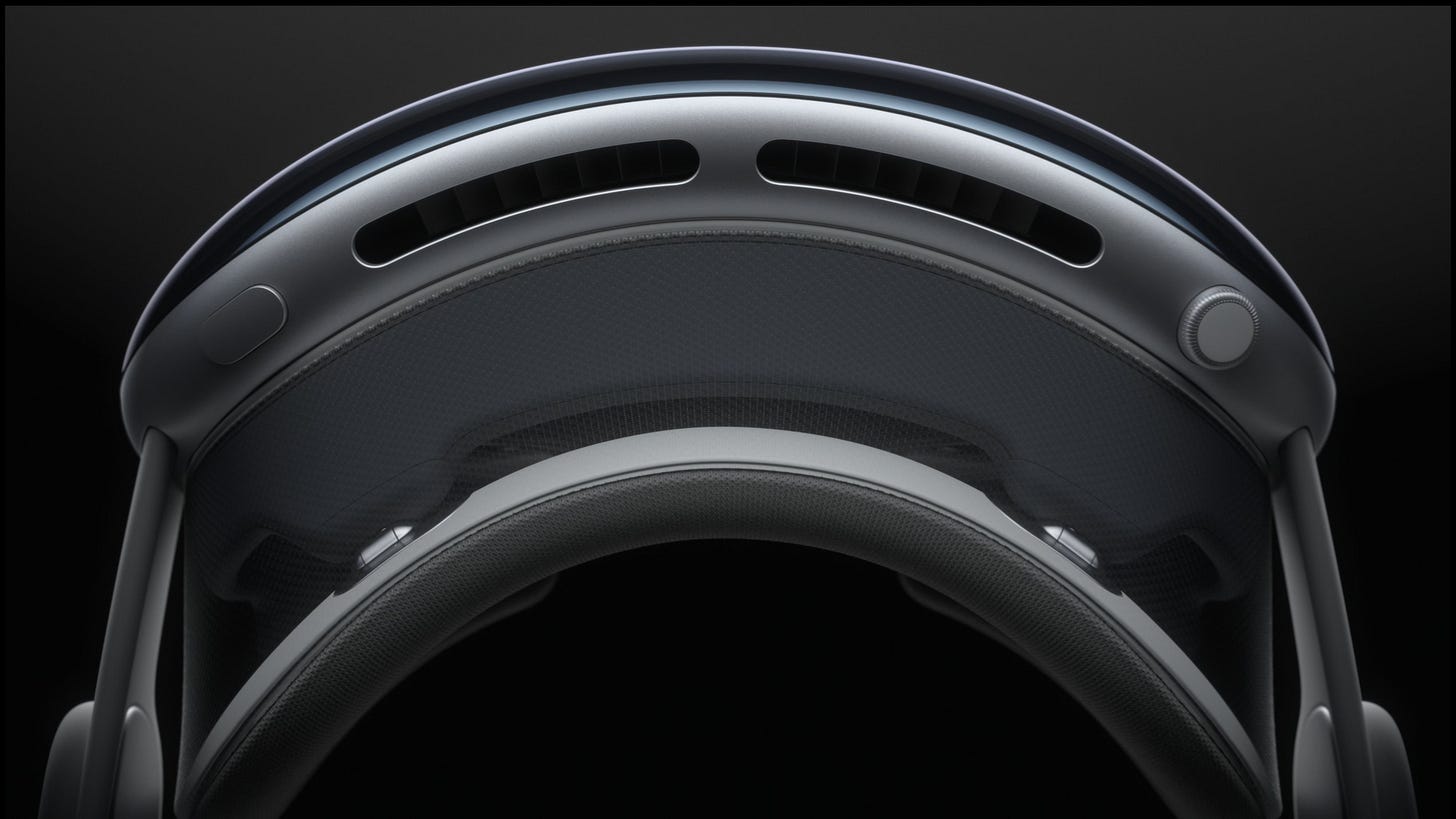

About how this industrial design looks like the perfect baby of the AirPods Max and the Apple Watch.

Or about how good see-through textures look in AR.

Or about how technically impressive it is for a headset to render dual 4K displays in real time.

There’s a lot to love and speculate about.

But now it’s 3 am and I have things to do tomorrow.